As a security practitioner, I’m interested in how large language models (LLMs) can make me more efficient in my work. But they’re not my focus, so I’m drawn to writing such as Simon Willison’s 2024 retrospective on AI, which is loaded with insights. It’s worth reading the whole thing, but here are my top three takeaways:

Despite being the most popular, GPT-4 isn’t the most advanced LLM; it’s not even in the top 50. I’ll admit that the few times I’ve used an LLM, I’ve defaulted to GPT because it’s just what comes to mind. Since reading this article, I’ve given Anthropic Claude a try, and I’ll have more to say about it in a later post. The point is, a heck of a lot of models are out there now, and it’s worth your time to think about which one is best to use. The answer might depend on the use case at hand.

Input is important. Perhaps this one isn’t surprising, since it’s the time-honored truism of “garbage in, garbage out.” But the point here is that the data fed to the model by its developers is far less important than what you can feed it in your prompt, which can now be as large as millions of tokens (roughly words, or more often pieces of words, since most models split text into subword units). As Mr. Willison states:

> You can now throw in an entire book and ask questions about its contents, but more importantly you can feed in a lot of example code to help the model correctly solve a coding problem.

But perhaps this article’s most important insight of all is that LLMs look easy to use, but they’re hard to use. The friendly chatbot interface and the TV commercials that show typical folks conversing with their phones while cooking, working on their cars, and so on can be pretty deceptive.

A lot of vendors seem to have missed this point and have wedged poorly thought-out, on-by-default AI widgets into their products. Because the prompts being supplied to those widgets are pretty weak (e.g., a search query comprising a few words), the output is often comically wrong. I’m sure everyone has noticed this problem with everyday tools like search engines, but it also affects specialized tools such as those we use to analyze threat intelligence.
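To make the contrast between a weak prompt and a well-fed one concrete, here is a minimal Python sketch. Everything in it is hypothetical: the function names `estimate_tokens` and `build_prompt`, the sample query and snippets, and especially the four-characters-per-token rule of thumb, which is only a rough approximation for English text and not how any real tokenizer works. It simply packs as many examples and context snippets as will fit into a token budget alongside the question.

```python
# Assumption: ~4 characters per token is a crude rule of thumb for English.
# Real tokenizers split text into subword units and will give different counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Return a rough token estimate for text (never less than 1)."""
    return max(1, len(text) // CHARS_PER_TOKEN)


def build_prompt(question: str, context_docs: list[str],
                 examples: list[str], budget_tokens: int) -> str:
    """Assemble a prompt from the question plus whatever extra material fits.

    Examples are considered first, then context documents. Any piece that
    would push the estimated total past budget_tokens is skipped.
    """
    parts = [f"Question: {question}"]
    used = estimate_tokens(parts[0])
    for label, items in (("Example", examples), ("Context", context_docs)):
        for item in items:
            block = f"{label}:\n{item}"
            cost = estimate_tokens(block)
            if used + cost > budget_tokens:
                continue  # this piece does not fit; try the next one
            parts.append(block)
            used += cost
    return "\n\n".join(parts)


if __name__ == "__main__":
    # A bare query, like the few words a search widget might pass along.
    weak = build_prompt("203.0.113.7 malicious?", [], [], budget_tokens=1000)

    # The same query with hypothetical supporting material attached.
    rich = build_prompt(
        "203.0.113.7 malicious?",
        context_docs=["Hypothetical note: host seen scanning port 22 last week."],
        examples=["Q: Is 198.51.100.9 malicious?\nA: Unknown; no sightings."],
        budget_tokens=1000,
    )
    print(estimate_tokens(weak), estimate_tokens(rich))
```

The design choice worth noting is the budget check: a bigger context window lets you include more, but you still have to decide what goes in and what gets left out.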

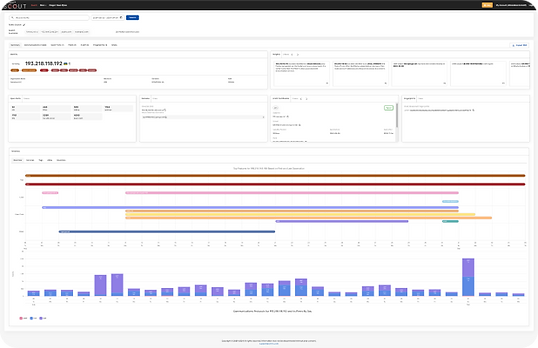

I’ll shamelessly promote my employer, Team Cymru, here: they got it right with the new LLM functionality in Pure Signal Scout. (I personally had nothing to do with the work that went into it, but my colleagues who did clearly took a sensible and considered approach.) This opt-in feature has delivered accurate threat intelligence insight for every query I’ve tried against it, likely because the prompt is so well engineered (see my second takeaway above). Even better, the insights are displayed in a tiered fashion, starting with unobtrusive bite-sized pieces by default and getting more detailed as you request them through buttons and twist-downs in the interface. That way, computing resources, screen real estate, and your attention aren’t wasted.

Mr. Willison is one of the few analysts with objective takes on both the strengths and weaknesses of LLMs, and I’ll keep paying attention to his updates.